코딩하는 타코야끼

[Study Notes] Week12 Day 3 [6 ~ 7] - ML


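1. Wine analysis with a Decision Tree

import pandas as pd
import plotly.express as px

red_url = "https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv"
white_url = "https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv"

red_wine = pd.read_csv(red_url, sep=";")
white_wine = pd.read_csv(white_url, sep=";")

red_wine.head()
white_wine.head()

# Reconstructed step (missing from these notes): build the combined `wine` frame.
# The labels red = 1 / white = 0 are inferred from the class counts printed after the split.
red_wine["color"] = 1
white_wine["color"] = 0
wine = pd.concat([red_wine, white_wine])

⚡️ Quality grades by red/white wine

fig = px.histogram(wine, x="quality", color="color")
fig.show()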

⚡️ Splitting the data

import numpy as np
from sklearn.model_selection import train_test_split

X = wine.drop(["color"], axis=1)
y = wine["color"]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2,
                                                    stratify=y,
                                                    random_state=0)

np.unique(y_train, return_counts=True)

>>>

(array([0, 1]), array([3918, 1279]))
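The counts above keep roughly the same white-to-red ratio as the full data set because of `stratify=y`. A minimal sketch of that behaviour on made-up labels follows; `X_demo` and `y_demo` are hypothetical arrays, not the wine data.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 750 samples of class 0 and 250 of class 1.
y_demo = np.array([0] * 750 + [1] * 250)
X_demo = np.arange(len(y_demo)).reshape(-1, 1)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, stratify=y_demo, random_state=0
)

# With stratify, the 3:1 class ratio is preserved in both subsets.
train_counts = np.bincount(y_tr)
test_counts = np.bincount(y_te)
print("train:", train_counts, "test:", test_counts)
```

Without `stratify`, the ratio in each subset would only match the original approximately, which can matter when one class is rare.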

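⚡️ Visualization

# How evenly did the split distribute the red/white wine samples between train and test? (shown here through the quality histogram)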

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x=X_train["quality"], name="Train"))

fig.add_trace(go.Histogram(x=X_test["quality"], name="Test"))

fig.update_layout(barmode="overlay")

fig.update_traces(opacity=0.75)

fig.show()

⚡️ Model training and accuracy

from sklearn.metrics import accuracy_score
from sklearn.tree import DecisionTreeClassifier

tree = DecisionTreeClassifier(random_state=0)
tree.fit(X_train, y_train)

X_train_pred = tree.predict(X_train)
X_test_pred = tree.predict(X_test)

print("Train acc : ", accuracy_score(y_train, X_train_pred))
print("Test acc : ", accuracy_score(y_test, X_test_pred))

>>>

Train acc : 0.9998075812969021

Test acc : 0.9830769230769231

2. Data preprocessing

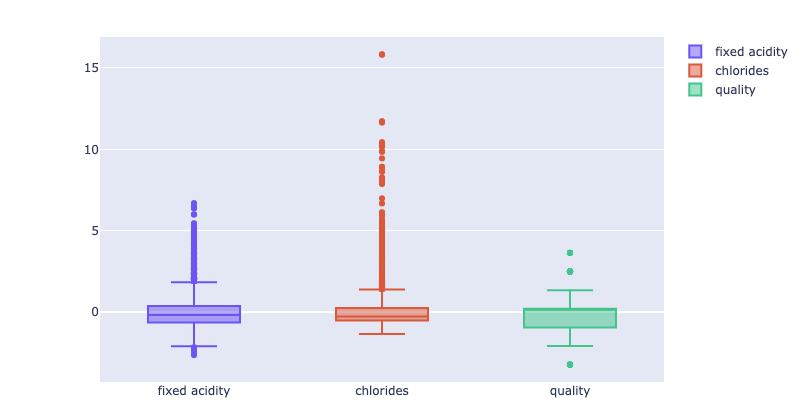

📍 Box plot

def px_box(target_df):
    # Draw box plots for three columns whose value ranges differ widely
    fig = go.Figure()
    fig.add_trace(go.Box(y=target_df["fixed acidity"], name="fixed acidity"))
    fig.add_trace(go.Box(y=target_df["chlorides"], name="chlorides"))
    fig.add_trace(go.Box(y=target_df["quality"], name="quality"))
    fig.show()

px_box(X)

- The columns have different min/max ranges, and their means and variances differ as well.

- Differences in feature scale like these can get in the way of finding the best model.

📍 Scaling transforms

from sklearn.preprocessing import MinMaxScaler, StandardScaler

MMS = MinMaxScaler()

SS = StandardScaler()

MMS.fit(X)

SS.fit(X)

X_mms = MMS.transform(X)

X_ss = SS.transform(X)

ss_df = pd.DataFrame(X_ss, columns=X.columns)

mms_df = pd.DataFrame(X_mms, columns=X.columns)

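- For decision trees, this kind of preprocessing makes no difference, because splits depend only on the ordering of values within each feature.

- It tends to matter mainly for models that optimize a cost function.

- Whether MinMaxScaler or StandardScaler works better is something you only find out by trying both.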
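To illustrate the first point, here is a small sketch on synthetic data (the arrays `X_raw`, `X_std`, and `y_demo` are made up for this example, not taken from the wine set). It fits the same tree on raw and standardized features and compares the predictions.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic features, rounded so neighbouring values are clearly separated,
# then stretched so each column lives on a very different scale.
rng = np.random.default_rng(0)
X_base = np.round(rng.normal(size=(300, 4)), 3)
y_demo = (X_base[:, 0] + X_base[:, 1] ** 2 > 1).astype(int)
X_raw = X_base * np.array([1.0, 10.0, 100.0, 1000.0])

# Standardize every column to mean 0 and standard deviation 1.
X_std = StandardScaler().fit_transform(X_raw)

# Same hyperparameters and seed, one tree per version of the features.
tree_raw = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_raw, y_demo)
tree_std = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_std, y_demo)

pred_raw = tree_raw.predict(X_raw)
pred_std = tree_std.predict(X_std)
print("identical predictions:", np.array_equal(pred_raw, pred_std))
```

Scaling is a monotonic, per-column transformation, so the tree can place its thresholds at the same positions in the sorted values either way. This is consistent with the identical accuracies reported in the two scaler experiments in this section.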

⚡️ MinMaxScaler

# Rescales each column so its minimum becomes 0 and its maximum becomes 1
px_box(mms_df)

# Model trained on the MinMaxScaler output
X_train, X_test, y_train, y_test = train_test_split(mms_df, y,
                                                    test_size=0.2,
                                                    stratify=y,
                                                    random_state=0)

tree = DecisionTreeClassifier(random_state=0)
tree.fit(X_train, y_train)

X_train_pred = tree.predict(X_train)
X_test_pred = tree.predict(X_test)

print("Train_acc : ", accuracy_score(y_train, X_train_pred))
print("Test_acc : ", accuracy_score(y_test, X_test_pred))

>>>

Train_acc : 0.9998075812969021

Test_acc : 0.9830769230769231
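For reference, min-max scaling computes x' = (x - min) / (max - min) per column. The sketch below checks this by hand against `MinMaxScaler` on a tiny made-up array (`X_demo` is hypothetical).

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Tiny hypothetical data set with two columns on different scales.
X_demo = np.array([[1.0, 200.0],
                   [2.0, 400.0],
                   [4.0, 1000.0]])

# Manual min-max scaling, column by column.
col_min = X_demo.min(axis=0)
col_max = X_demo.max(axis=0)
manual_mms = (X_demo - col_min) / (col_max - col_min)

# The same transform through scikit-learn.
sk_mms = MinMaxScaler().fit_transform(X_demo)

print(np.allclose(manual_mms, sk_mms))
```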

⚡️ StandardScaler

# Rescales each column to mean 0 and standard deviation 1
px_box(ss_df)

# Model trained on the StandardScaler output
X_train, X_test, y_train, y_test = train_test_split(ss_df, y,
                                                    test_size=0.2,
                                                    stratify=y,
                                                    random_state=0)

tree = DecisionTreeClassifier(random_state=0)
tree.fit(X_train, y_train)

X_train_pred = tree.predict(X_train)
X_test_pred = tree.predict(X_test)

print("Train_acc : ", accuracy_score(y_train, X_train_pred))
print("Test_acc : ", accuracy_score(y_test, X_test_pred))

>>>

Train_acc : 0.9998075812969021

Test_acc : 0.9830769230769231
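Standardization computes z = (x - mean) / std per column, where `StandardScaler` uses the population standard deviation (`ddof=0`). A short check on a made-up array (`X_demo` is hypothetical):

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Tiny hypothetical data set with two columns on different scales.
X_demo = np.array([[1.0, 200.0],
                   [2.0, 400.0],
                   [4.0, 1000.0]])

# Manual standardization; np.std defaults to ddof=0, matching StandardScaler.
manual_ss = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)

# The same transform through scikit-learn.
sk_ss = StandardScaler().fit_transform(X_demo)

print(np.allclose(manual_ss, sk_ss))
```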

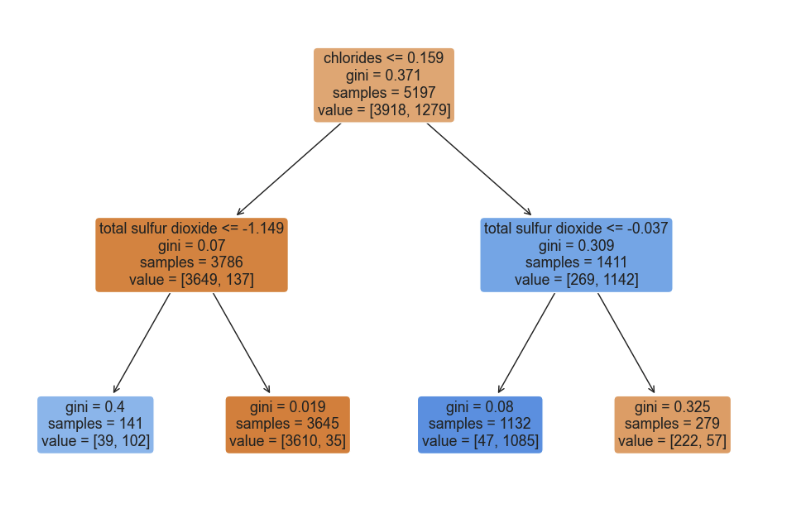

3. Classifying wine taste - binary classification

📍 Binarizing the quality column

# Label a wine as tasty (1) when its quality grade is above 5, otherwise 0
wine["taste"] = [1 if grade > 5 else 0 for grade in wine["quality"]]
wine.head()

# Drop quality as well, since taste is derived directly from it
X = wine.drop(["taste", "quality"], axis=1)
y = wine["taste"]

X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.2,
                                                    stratify=y,
                                                    random_state=0)

tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(X_train, y_train)

X_train_pred = tree.predict(X_train)
X_test_pred = tree.predict(X_test)

print("Train_acc : ", accuracy_score(y_train, X_train_pred))
print("Test_acc : ", accuracy_score(y_test, X_test_pred))

>>>

Train_acc : 0.7379257263806042

Test_acc : 0.71

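import matplotlib.pyplot as plt
import sklearn.tree as sk_tree

plt.figure(figsize=(12, 8))
# list(...) because some scikit-learn versions reject a pandas Index for feature_names
sk_tree.plot_tree(tree, feature_names=list(X.columns),
                  rounded=True,
                  filled=True)
plt.show()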
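As a side note on the binarization step in this section, the list comprehension over `quality` can also be written as a vectorized comparison. A minimal sketch on a made-up Series (`quality_demo` is hypothetical):

```python
import pandas as pd

# Hypothetical quality grades, standing in for wine["quality"].
quality_demo = pd.Series([3, 5, 6, 7, 5, 8], name="quality")

# List-comprehension form, as used in these notes.
taste_list = [1 if grade > 5 else 0 for grade in quality_demo]

# Vectorized form: compare the whole column at once, then cast bool to int.
taste_vec = (quality_demo > 5).astype(int)

print(taste_list)
print(taste_vec.tolist())
```

Both forms give the same labels; the vectorized one avoids a Python-level loop, which may help on larger frames.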

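4. Pipeline

- In machine learning, a "pipeline" (Pipeline) chains a sequence of transformation and estimation steps, such as data preprocessing, feature extraction, model training, and prediction, so that they run in order.

from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X = wine.drop(["color"], axis=1)
y = wine["color"]

# Reconstructed step (missing from these notes): the step names "scaler" and "clf"
# are taken from the output and the calls that follow.
estimators = [("scaler", StandardScaler()), ("clf", DecisionTreeClassifier())]
pipe = Pipeline(estimators)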

pipe.steps[0]

>>>

('scaler', StandardScaler())

📍 set_params( )

- Step name "clf" + two underscores "__" + parameter name

pipe.set_params(clf__max_depth=2)

# pipe.set_params(clf__random_state=0)
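The naming rule can be checked with `get_params()`, which lists every settable name in the `step__parameter` form. The sketch below uses a separate, hypothetical `demo_pipe` so it runs on its own.

```python
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Hypothetical pipeline with the same step names as in these notes.
demo_pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("clf", DecisionTreeClassifier()),
])

# Several parameters can be set in one call, each addressed as step__parameter.
demo_pipe.set_params(clf__max_depth=2, clf__random_state=0)

# The value is stored on the underlying estimator of the "clf" step.
params = demo_pipe.get_params()
clf_depth = demo_pipe.named_steps["clf"].max_depth
print(params["clf__max_depth"], clf_depth)
```

The same double-underscore names are what grid-search tools expect when tuning a pipeline step.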

📍 Model training and inference

X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.2,
                                                    stratify=y,
                                                    random_state=13)

pipe.fit(X_train, y_train)

X_train_pred = pipe.predict(X_train)
X_test_pred = pipe.predict(X_test)

train_acc = accuracy_score(y_train, X_train_pred)
test_acc = accuracy_score(y_test, X_test_pred)

print("Train_acc: ", train_acc)
print("Test_acc: ", test_acc)

>>>

Train_acc: 0.9657494708485664

Test_acc: 0.9576923076923077
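What `pipe.fit` and `pipe.predict` do internally can be sketched by hand: the scaler is fitted on the training data only, and the same fitted scaler is then reused on the test data. The example below uses synthetic data from `make_classification` (an assumption for illustration, not the wine set) and compares the two routes.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data.
X_demo, y_demo = make_classification(n_samples=400, n_features=5, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, stratify=y_demo, random_state=0
)

# Route 1: the pipeline handles scaling and fitting in one call.
demo_pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("clf", DecisionTreeClassifier(max_depth=2, random_state=0)),
])
demo_pipe.fit(X_tr, y_tr)
pipe_pred = demo_pipe.predict(X_te)

# Route 2: the same steps by hand. The scaler only ever sees the training data.
scaler = StandardScaler().fit(X_tr)
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(scaler.transform(X_tr), y_tr)
manual_pred = clf.predict(scaler.transform(X_te))

print("same predictions:", np.array_equal(pipe_pred, manual_pred))
```

Note that section 2 of these notes fits the scalers on all of `X` before splitting; a pipeline avoids that by construction.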

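📍 Inspecting the model structure

import matplotlib.pyplot as plt
import sklearn.tree as sk_tree

plt.figure(figsize=(12, 8))
# pipe["clf"] is the fitted tree; list(...) because some scikit-learn versions reject a pandas Index
sk_tree.plot_tree(pipe["clf"], feature_names=list(X.columns),
                  rounded=True,
                  filled=True)
plt.show()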
